What is Hand Tracking in VR?

Posted: May 7, 2021

Hand tracking in VR allows you to interact without needing VR controllers.

Sensors capture data on the position, orientation, and velocity of your hands. Hand tracking software then uses this data to create a real-time virtual embodiment of them.

These virtual hands are integrated into VR applications, allowing you to see and use your hands naturally. But while the end-user experience of hand tracking in VR feels intuitive, in reality this relies on layers of sophisticated technology.

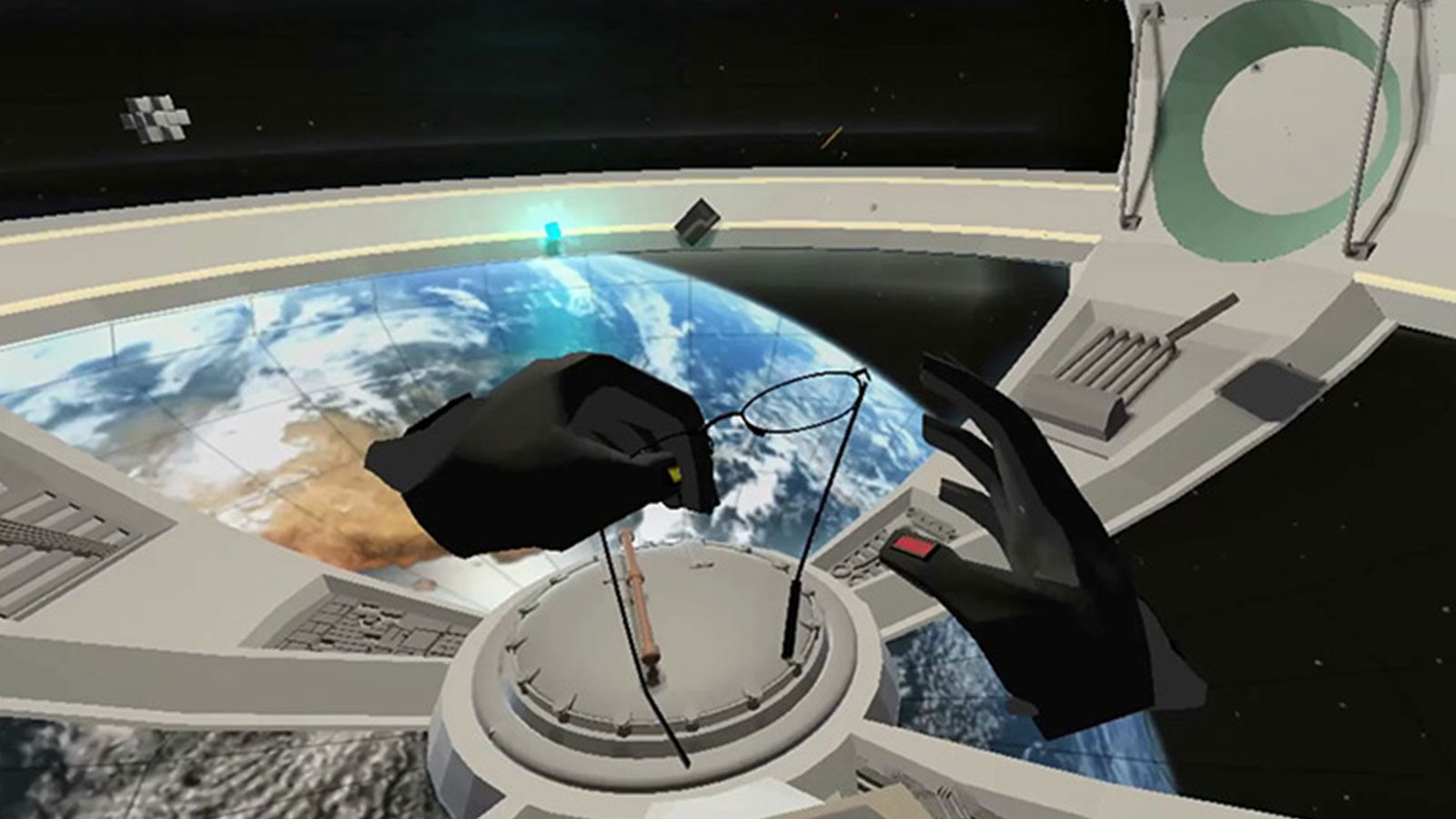

Meet your virtual hands

In this blog, we’ll explore the layers of hand tracking technology lying behind the deceptively simple interactions in one of our best-known demos: Blocks.

Being able to stack building blocks is a core milestone in child development. By solving this simple but fundamental interaction, you also solve core challenges in VR.

This blog will take you on a quick tour of hand tracking in VR (and spatial computing more generally), and explore what makes for good hand tracking in VR.

What features are really important? And what do you need to consider when developing and evaluating VR applications with hand tracking?

VR hand tracking hardware: The eyes of the system

All hand tracking in VR starts with sensors, usually cameras of some sort. These are the digital eyes of the system, capturing raw data on the position, orientation, and velocity of your hands.

The cameras are attached to or, increasingly, integrated into your VR headset. In integrated systems, the inside-out cameras that track the headset’s position often double as hand tracking sensors.

No matter how good your hand tracking software, the quality of data captured by your sensors will be a limiting factor in the success of the system as a whole.

Of course, VR hand tracking sensors need to meet basic hardware performance indicators such as power consumption and physical robustness. Beyond these, gold-standard hand tracking hardware would include:

- Accurate depth perception: Often, though not always, achieved by using two cameras for a stereoscopic view.

- Field of view wider/deeper than that of the display: So that whenever your hands come into view, they are already tracked.

- Frame rate matching that of the VR headset: An important factor in minimizing latency. It also reduces motion blur, enabling higher-speed interactions.

- Its own illumination: Hands lit only by ambient visible light may not be bright enough to provide adequate data.
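To see why matching frame rates matters, here’s some back-of-the-envelope arithmetic as a small Python sketch. It assumes each displayed frame uses the newest completed camera frame and ignores processing and rendering time, so real latency will be higher. The function names are our own illustration, not part of any SDK.

```python
# Minimal sketch: how sensor frame rate relates to display frame rate.
# Assumption: each displayed frame uses the most recent completed camera frame,
# and tracking/render processing time is ignored.

def frame_period_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def worst_case_sample_age_ms(sensor_fps: float) -> float:
    """Oldest the 'latest' hand sample can be when a display frame is drawn.

    A new camera frame arrives once per sensor period, so just before the next
    one lands, the newest sample is almost one full period old.
    """
    return frame_period_ms(sensor_fps)


def fresh_sample_fraction(sensor_fps: float, display_fps: float) -> float:
    """Fraction of display frames that can show a new hand pose.

    If the sensor is slower than the display, some display frames must reuse
    the previous pose, which reads as judder.
    """
    return min(1.0, sensor_fps / display_fps)


DISPLAY_FPS = 90  # example headset refresh rate
for sensor_fps in (60, 90, 120):
    print(
        f"sensor {sensor_fps:>3} Hz: "
        f"worst-case sample age {worst_case_sample_age_ms(sensor_fps):5.2f} ms, "
        f"fresh poses on {fresh_sample_fraction(sensor_fps, DISPLAY_FPS):.0%} "
        f"of {DISPLAY_FPS} Hz display frames"
    )
```

With a 90 Hz display, a 60 Hz sensor leaves roughly one display frame in three showing a repeated hand pose, while a sensor running at 90 Hz or above can supply a fresh pose for every frame.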

Blocks runs on Ultraleap’s Leap Motion Controller or Stereo IR 170 camera modules. While not particularly complicated on the hardware front, they’re optimized for hand tracking data capture.

Our new V5/Gemini software platform also runs on third-party hardware such as the Qualcomm XR2 reference design, and Varjo’s XR-3 and VR-3 headsets.

Ultraleap’s hand tracking modules use two infrared cameras plus infrared LEDs for illumination. The cameras and LEDs are synced. The LEDs flash up to 120 times a second, illuminating your hands. Simultaneously, the cameras take snapshots of them.
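The timing relationship between the LEDs and the cameras can be sketched in a few lines. Only the 120 Hz rate comes from the text; the exposure and pulse durations below are made-up values, not Ultraleap specifications. The sketch centres each LED pulse on a camera exposure and computes how much of the time the LEDs are actually lit, which hints at one likely benefit of strobing over constant illumination: lower power draw.

```python
from dataclasses import dataclass

# Hypothetical illustration of strobed illumination synced to camera exposure.
# FRAME_RATE_HZ follows the text ("up to 120 times a second"); the durations
# below are made-up values, not hardware specifications.
FRAME_RATE_HZ = 120
EXPOSURE_MS = 1.0    # hypothetical camera exposure per frame
LED_PULSE_MS = 1.2   # hypothetical LED on-time, slightly longer than exposure


@dataclass
class Window:
    start_ms: float
    end_ms: float

    def covers(self, other: "Window") -> bool:
        """True if this window fully contains the other one."""
        return self.start_ms <= other.start_ms and other.end_ms <= self.end_ms


def frame_schedule(n_frames: int):
    """Yield (exposure, led) windows per frame, with each LED pulse centred on its exposure."""
    period = 1000.0 / FRAME_RATE_HZ
    margin = (LED_PULSE_MS - EXPOSURE_MS) / 2
    for i in range(n_frames):
        start = i * period
        exposure = Window(start, start + EXPOSURE_MS)
        led = Window(start - margin, start + EXPOSURE_MS + margin)
        yield exposure, led


def led_duty_cycle() -> float:
    """Fraction of time the LEDs are lit when they only fire around each exposure."""
    return LED_PULSE_MS * FRAME_RATE_HZ / 1000.0


schedule = list(frame_schedule(3))
# Every exposure happens while the hands are illuminated.
print(all(led.covers(exposure) for exposure, led in schedule))
print(f"LED duty cycle: {led_duty_cycle():.1%}")
```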


VR hand tracking software: The brain of the system

Once you’ve collected quality data and done some basic cleaning and processing, it’s time to use it to build a pair of virtual hands that VR applications can use. Enter the tracking engine: the brain of the system.

Ultraleap’s Tracking Engine is the code that turns raw data into a real-time digital model of your hands. A tracking engine is the core of any VR hand tracking system, and it’s where most hand tracking patents are found.

Note that you won’t see Ultraleap’s Tracking Engine as a separate item in our software downloads. This is because it’s held within our Tracking Service. The Tracking Service is a larger piece of software that manages camera hardware, runs the Tracking Engine, and sends digital hand models to applications.
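The split between the Tracking Service and the Tracking Engine is easier to picture as code. Here is a hypothetical Python sketch of the three jobs just described: managing the camera, running the engine, and delivering hand models to applications. None of the class or method names are Ultraleap APIs, and a production service would also need to deal with concerns like threading and device connection.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical sketch only: these names are illustrative, not Ultraleap APIs.


@dataclass
class CameraFrame:
    timestamp_us: int
    left_image: bytes   # stand-ins for a stereo IR image pair
    right_image: bytes


@dataclass
class HandModel:
    timestamp_us: int
    hands_detected: int


TrackingEngine = Callable[[CameraFrame], HandModel]
Subscriber = Callable[[HandModel], None]


class TrackingService:
    def __init__(self, camera: Iterable[CameraFrame], engine: TrackingEngine):
        self._camera = camera    # the service owns the frame source (hardware)
        self._engine = engine    # the engine turns raw frames into hand models
        self._subscribers: List[Subscriber] = []

    def subscribe(self, callback: Subscriber) -> None:
        """Applications register to receive every new hand model."""
        self._subscribers.append(callback)

    def run(self) -> None:
        """Pull frames, run the engine, and fan results out to applications."""
        for frame in self._camera:
            model = self._engine(frame)
            for callback in self._subscribers:
                callback(model)


# Usage with a fake camera and a dummy engine that never finds any hands.
def dummy_engine(frame: CameraFrame) -> HandModel:
    return HandModel(frame.timestamp_us, hands_detected=0)


fake_camera = [CameraFrame(t * 8333, b"", b"") for t in range(3)]
received: List[HandModel] = []
service = TrackingService(fake_camera, dummy_engine)
service.subscribe(received.append)
service.run()
print([m.timestamp_us for m in received])
```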

The robust, realistic interactions in Blocks rely on the skeletal tracking model in our Tracking Engine. We don’t recreate inessential surface details. Instead, we recreate the fundamental structure of your hands, wrists and forearms, modelling every joint and bone. (Our tracking also conforms to OpenXR standards.)
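Since the tracking conforms to OpenXR, a skeletal hand model can be sketched using the 26-joint layout defined by OpenXR’s `XR_EXT_hand_tracking` extension, where each joint carries a pose (position and orientation) and a radius. The Python classes below are hypothetical and don’t represent Ultraleap’s internal data structures; they simply show how joints and bones fit together.

```python
import math
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w)


class HandJoint(IntEnum):
    """The 26 joints of OpenXR's XR_EXT_hand_tracking, in spec order."""
    PALM = 0
    WRIST = 1
    THUMB_METACARPAL = 2
    THUMB_PROXIMAL = 3
    THUMB_DISTAL = 4
    THUMB_TIP = 5
    INDEX_METACARPAL = 6
    INDEX_PROXIMAL = 7
    INDEX_INTERMEDIATE = 8
    INDEX_DISTAL = 9
    INDEX_TIP = 10
    MIDDLE_METACARPAL = 11
    MIDDLE_PROXIMAL = 12
    MIDDLE_INTERMEDIATE = 13
    MIDDLE_DISTAL = 14
    MIDDLE_TIP = 15
    RING_METACARPAL = 16
    RING_PROXIMAL = 17
    RING_INTERMEDIATE = 18
    RING_DISTAL = 19
    RING_TIP = 20
    LITTLE_METACARPAL = 21
    LITTLE_PROXIMAL = 22
    LITTLE_INTERMEDIATE = 23
    LITTLE_DISTAL = 24
    LITTLE_TIP = 25


def _chain(finger: str, segments: Tuple[str, ...]) -> List[HandJoint]:
    return [HandJoint[f"{finger}_{seg}"] for seg in segments]


# Joint chains per finger; the thumb has no intermediate joint.
FINGER_CHAINS: Dict[str, List[HandJoint]] = {
    "thumb": _chain("THUMB", ("METACARPAL", "PROXIMAL", "DISTAL", "TIP")),
    **{
        f.lower(): _chain(f, ("METACARPAL", "PROXIMAL", "INTERMEDIATE", "DISTAL", "TIP"))
        for f in ("INDEX", "MIDDLE", "RING", "LITTLE")
    },
}

# Each bone connects two consecutive joints in a finger chain.
BONES = [(a, b) for chain in FINGER_CHAINS.values() for a, b in zip(chain, chain[1:])]


@dataclass
class JointPose:
    position: Vec3     # metres, in tracking space
    orientation: Quat  # unit quaternion
    radius: float      # approximate joint thickness, metres


@dataclass
class HandSkeleton:
    joints: List[JointPose]  # indexed by HandJoint

    def bone_length(self, a: HandJoint, b: HandJoint) -> float:
        return math.dist(self.joints[a].position, self.joints[b].position)


# Dummy hand with joints spaced 1 cm apart along x, just to exercise the model.
hand = HandSkeleton(
    [JointPose((j * 0.01, 0.0, 0.0), (0.0, 0.0, 0.0, 1.0), 0.008) for j in HandJoint]
)
print(len(HandJoint), len(BONES))
```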

In the new V5/Gemini platform, this dynamic digital skeleton is generated by a single unified neural network. The result is even more robust hand models, and the most obvious improvements are better initialization and improved two-hand interactions.

Internally, we evaluate tracking performance using detailed qualitative and quantitative measures.

Your hands are complex structures. Evaluating the success of hand tracking software is complex too. Yet all of these measures are different dimensions of the same basic question: how closely do your virtual hands match the position and movement of your real hands?
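One widely used quantitative answer to that question is mean per-joint position error (MPJPE): the average distance between each tracked joint and its true position, where the true position comes from a reference system such as optical motion capture. This isn’t necessarily how we score releases internally; it’s a minimal Python sketch of the general metric.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def per_joint_errors(tracked: Sequence[Vec3], ground_truth: Sequence[Vec3]) -> List[float]:
    """Euclidean distance between each tracked joint and its ground-truth position."""
    if len(tracked) != len(ground_truth):
        raise ValueError("tracked and ground-truth skeletons must have the same joints")
    return [math.dist(t, g) for t, g in zip(tracked, ground_truth)]


def mean_per_joint_position_error(tracked: Sequence[Vec3], ground_truth: Sequence[Vec3]) -> float:
    """MPJPE for a single frame, in the same units as the inputs."""
    errors = per_joint_errors(tracked, ground_truth)
    return sum(errors) / len(errors)


# Toy three-joint example in millimetres: only the last joint is off, by 5 mm.
tracked = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (20.0, 3.0, 4.0)]
truth = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (20.0, 0.0, 0.0)]
print(f"MPJPE: {mean_per_joint_position_error(tracked, truth):.2f} mm")
```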

VR developers need hand tracking to perform consistently. To create effective VR hand tracking applications, you have to design around the minimum, not the maximum, performance of a hand tracking system.

For any given release, we test with a diverse group of users in a variety of environments to make sure the minimum performance is as good as we can make it. We prioritize the elements that make the biggest difference. (It probably doesn’t matter if the tip of your pinky is sometimes a few millimetres out.)
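Designing around the minimum means looking at the worst sessions and the tail of the error distribution, not the overall average. This sketch, our own illustration with made-up numbers, ranks hypothetical test sessions by their 95th-percentile per-frame error.

```python
import math
import statistics
from typing import Dict, List, Tuple


def percentile(values: List[float], p: float) -> float:
    """p-th percentile (0-100), linearly interpolating between sorted samples."""
    ordered = sorted(values)
    k = (len(ordered) - 1) * p / 100.0
    lo, hi = math.floor(k), math.ceil(k)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (k - lo)


def worst_session(sessions: Dict[str, List[float]], p: float = 95.0) -> Tuple[str, float]:
    """Return the session with the highest p-th percentile error, and that error."""
    scores = {name: percentile(errors, p) for name, errors in sessions.items()}
    name = max(scores, key=scores.get)
    return name, scores[name]


# Made-up per-frame errors (mm) for two hypothetical test sessions.
sessions = {
    "user A, office lighting": [4.0, 4.5, 5.0, 5.5, 6.0],
    "user B, near a bright window": [5.0, 6.0, 7.0, 25.0, 30.0],
}
pooled = [e for errors in sessions.values() for e in errors]
print(f"pooled mean error: {statistics.mean(pooled):.1f} mm")
name, score = worst_session(sessions)
print(f"worst session: {name}, p95 error {score:.1f} mm")
```

Here the pooled mean is under 10 mm, while one session’s 95th-percentile error is around 29 mm. That session, not the average, is what your interaction design has to survive.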

Most people don’t have access to sophisticated testing rigs. However, anyone can do a good qualitative assessment using equipment they do have: their hands, and those of their users.

What happens when you wriggle your fingers? Move your hands fast? Put one hand close to the other? Rotate your wrists? What happens when someone with smaller or larger hands does the same motions? Most importantly, what happens when you make the hand movements you want users to perform in your VR experience?

This will give you a good handle on the basics of how your hand tracking is performing, and what limitations you might need to design around.


Tracking development tools: An essential component of hand tracking in VR

Once a VR application knows where a user’s hand is in space, it also needs to understand their intent: what they want to do with that hand. Does that clenched fist mean the user is grabbing an object, trying to punch it away, or is it just an unconscious hand gesture?

To add to the problem, game physics engines were never designed for human hands. When you try to grab an object, you send it flying as the physics engine desperately tries to keep your fingers out of it.

An essential component of good hand tracking in VR is a set of tools that solve basic challenges like this. Blocks is built using Ultraleap’s Interaction Engine (available in our Unity plugin). This is a layer that exists between the game engine and real-world hand physics.

By combining the pose of your hand and the shape and size of an object, it determines whether or not you’re trying to grasp it.
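To give a feel for how hand pose and object size can combine into a grasp decision, here is a deliberately simple heuristic in Python. It is not the Interaction Engine’s actual algorithm. It assumes the object is approximated by a bounding sphere, and it treats the hand as grasping when the thumb tip and at least one opposing fingertip are both touching the surface; the function names and the 1 cm tolerance are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def touching(tip: Vec3, centre: Vec3, radius: float, tolerance: float) -> bool:
    """True if a fingertip is within `tolerance` of the sphere's surface (inside or outside)."""
    return abs(math.dist(tip, centre) - radius) <= tolerance


def is_grasping(
    thumb_tip: Vec3,
    finger_tips: Sequence[Vec3],
    centre: Vec3,
    radius: float,
    tolerance: float = 0.01,  # hypothetical contact tolerance, metres
) -> bool:
    """Hypothetical grasp test: thumb plus one opposing fingertip on the object."""
    if not touching(thumb_tip, centre, radius, tolerance):
        return False
    thumb_dir = _sub(thumb_tip, centre)
    for tip in finger_tips:
        # Opposition: the finger touches the side of the object facing away from the thumb.
        if touching(tip, centre, radius, tolerance) and _dot(thumb_dir, _sub(tip, centre)) < 0:
            return True
    return False


# A 6 cm block at the origin, pinched between thumb and index finger.
print(is_grasping((-0.03, 0.0, 0.0), [(0.031, 0.0, 0.0)], (0.0, 0.0, 0.0), 0.03))
```

Requiring opposition is what separates a grasp from a finger that just happens to brush the block, which can then be left to ordinary physics as a poke.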

To make stacking blocks work in VR, it also implements an alternate set of physics rules. When you reach out to grasp a block, your fingers phase through it. But when you pile the blocks on top of one another, or poke them with a finger, they behave like solid objects.
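These alternate physics rules can be sketched as a tiny per-block state machine. This is our own hypothetical illustration, not Interaction Engine code: a free block is an ordinary rigid body that fingers can push, while a grasped block follows the hand directly and ignores finger collisions. On release, the block goes back to physics carrying the hand’s velocity, so throws work.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


class BlockState(Enum):
    FREE = auto()     # ordinary rigid body, solid to fingers
    GRASPED = auto()  # driven by the hand; fingers may pass through it


@dataclass
class Block:
    position: Vec3
    velocity: Vec3 = (0.0, 0.0, 0.0)
    state: BlockState = BlockState.FREE
    grasp_offset: Vec3 = (0.0, 0.0, 0.0)

    @property
    def collides_with_fingers(self) -> bool:
        # Block-to-block collisions are assumed to stay on in both states (not modelled here).
        return self.state is BlockState.FREE


def step(block: Block, grasping: bool, hand_pos: Vec3, hand_vel: Vec3) -> None:
    """Advance one frame of the hypothetical grasp/release rules."""
    if grasping and block.state is BlockState.FREE:
        # Grasp starts: remember where the block sits relative to the hand.
        block.state = BlockState.GRASPED
        block.grasp_offset = _sub(block.position, hand_pos)
    if block.state is BlockState.GRASPED:
        if grasping:
            # Held: the hand drives the block directly, so physics cannot fling it away.
            block.position = _add(hand_pos, block.grasp_offset)
            block.velocity = hand_vel
        else:
            # Released: return control to physics, keeping the hand's velocity for throws.
            block.state = BlockState.FREE
            block.velocity = hand_vel


block = Block((0.0, 0.0, 0.0))
step(block, True, (0.0, -0.05, 0.0), (0.0, 0.0, 0.0))   # grab
step(block, True, (0.1, -0.05, 0.0), (1.0, 0.0, 0.0))   # carry
step(block, False, (0.1, -0.05, 0.0), (1.0, 0.0, 0.0))  # let go
print(block.state, block.collides_with_fingers, block.velocity)
```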

The results would be impossible in reality, but feel natural, satisfying, and easy to use.


VR design guidelines: Because UX design for hand tracking is different from VR controllers

Finally, good hand tracking in VR also depends on detailed, specific guidelines for designing with hands.

Blocks exemplifies one of our key principles of successful hand tracking UX, and why it's different to VR controllers. It's this: design primarily for direct interaction.

When you pick up a block, that’s a direct interaction. In contrast, when you turn your palm upwards to reveal the wearable menu, that’s an abstract gesture. But when you press a button on the wearable menu, we’re back to direct interaction.
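An abstract gesture like “palm up to reveal the menu” usually comes down to a geometric test on the tracked hand. This is a hypothetical Python sketch, not the Blocks implementation: it compares the palm normal with world up (assuming a y-up world frame and a unit-length normal) and uses two thresholds, a form of hysteresis, so the menu doesn’t flicker when the hand hovers near the boundary. The 30° and 45° values are illustrative.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
UP: Vec3 = (0.0, 1.0, 0.0)  # assumes a y-up world frame


class PalmUpDetector:
    """Hypothetical 'palm up' gesture detector with hysteresis."""

    def __init__(self, open_deg: float = 30.0, close_deg: float = 45.0):
        # Show the menu within 30 degrees of straight up; hide it only past 45 degrees.
        self.open_cos = math.cos(math.radians(open_deg))
        self.close_cos = math.cos(math.radians(close_deg))
        self.active = False

    def update(self, palm_normal: Vec3) -> bool:
        # Cosine of the angle between the (unit) palm normal and world up.
        cos_angle = sum(n * u for n, u in zip(palm_normal, UP))
        if not self.active and cos_angle >= self.open_cos:
            self.active = True
        elif self.active and cos_angle < self.close_cos:
            self.active = False
        return self.active


detector = PalmUpDetector()
tilt_40 = (math.sin(math.radians(40)), math.cos(math.radians(40)), 0.0)
print(detector.update((0.0, -1.0, 0.0)))  # palm down: menu hidden
print(detector.update((0.0, 1.0, 0.0)))   # palm up: menu shown
print(detector.update(tilt_40))           # slight tilt: menu stays open
```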

The majority of Blocks is direct interaction, supplemented by a couple of useful abstract gestures.

Well-designed virtual objects are the foundation of engaging VR hand tracking experiences. You have a lifetime of familiarity with buttons and building blocks. So when they appear in Blocks, you understand what you can do with little or no instruction.

But direct interaction can also take you beyond what’s possible in the physical world. In Blocks, you spawn a new virtual building block by pinching your fingers together and moving your hands apart.

While in the physical world this would be impossible, we match the user’s mental model of how such an interaction would work if it were possible. Users learn powerful extra interactions like this quickly if they make sense in context.
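As a rough illustration of how such a two-handed interaction might be wired up, here is a hypothetical Python sketch: a pinch is detected from the thumb-to-index distance (with separate start and end thresholds to avoid flicker), the preview block’s size follows the distance between the two pinch points, and releasing either pinch spawns the block. The thresholds, size limits and size mapping are our assumptions, not how Blocks is actually implemented.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

PINCH_START_M = 0.02  # hypothetical: tips closer than 2 cm start a pinch
PINCH_END_M = 0.03    # hypothetical: pinch ends once tips separate beyond 3 cm


def is_pinching(thumb_tip: Vec3, index_tip: Vec3, was_pinching: bool) -> bool:
    threshold = PINCH_END_M if was_pinching else PINCH_START_M
    return math.dist(thumb_tip, index_tip) < threshold


def pinch_point(thumb_tip: Vec3, index_tip: Vec3) -> Vec3:
    """Midpoint between thumb and index tips."""
    return tuple((a + b) / 2 for a, b in zip(thumb_tip, index_tip))


def spawn_block_size(left: Vec3, right: Vec3, min_size: float = 0.02, max_size: float = 0.5) -> float:
    """Hypothetical mapping: block edge length follows the distance between pinch points."""
    return max(min_size, min(max_size, math.dist(left, right)))


class BlockSpawner:
    """Pinch with both hands, pull apart, release either pinch to spawn."""

    def __init__(self) -> None:
        self.left = self.right = False
        self.preview_size: Optional[float] = None

    def update(self, left_thumb: Vec3, left_index: Vec3,
               right_thumb: Vec3, right_index: Vec3) -> Optional[float]:
        """Return the spawned block's edge length on release, otherwise None."""
        self.left = is_pinching(left_thumb, left_index, self.left)
        self.right = is_pinching(right_thumb, right_index, self.right)
        if self.left and self.right:
            self.preview_size = spawn_block_size(
                pinch_point(left_thumb, left_index), pinch_point(right_thumb, right_index)
            )
            return None
        spawned, self.preview_size = self.preview_size, None
        return spawned


spawner = BlockSpawner()
spawner.update((0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.2, 0.0, 0.0), (0.21, 0.0, 0.0))
size = spawner.update((0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.2, 0.0, 0.0), (0.26, 0.0, 0.0))
print(f"spawned block edge: {size:.2f} m")
```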


Go create!

Ultraleap’s hardware, software, tools, and design guidelines are the world’s most advanced resource for developers working with hand tracking. They’re based on 10 years and 5 generations of innovative software, over 150 patents, and a community of over 350,000 developers.

Hand tracking in VR is not a fully solved problem, but today’s technology is far more robust and effective than earlier generations. And the rewards of bringing hands into VR are immense.

Hand tracking is to VR what the touchscreen was to 2D computing. It's an accessible, low-friction input method that opens up new demographics and unlocks new use cases and markets: from making VR training accessible, to streamlining design workflows, to deepening immersion.

We’ve done the heavy lifting, so you can focus on building the VR applications of the future. Let us know what you’re creating – and how we can help.
