What is spatial computing?

Posted: April 27, 2020

Spatial computing uses the 3D space around you as a canvas for a user interface. Computing has leapt beyond the confines of 2D screens, and our gestures and voices are now the controllers.

Spatial computing is broadly synonymous with extended reality (XR) – itself an umbrella term for virtual, augmented, and mixed reality. The term spatial computing, however, puts the emphasis on space: in XR, the three-dimensional world around you becomes the interface.

Why does spatial computing matter?

The human brain has evolved to deal with a three-dimensional physical environment, not 2D screens. Even the language we use to describe thought is built around physical metaphor – if you grasp my meaning.

Spatial computing taps into this deep, embodied knowledge.

In spatial computing, digital content of any sort (stories, data visualisations, mathematical concepts, impossibly large or small things such as galaxies or molecular structures) can be explored in ways that align with human cognitive capabilities. The result is a new naturalism: true to your physical expectations, but taking you beyond the limits of human perception and physical capability.

That’s the concept. To get there, three core problems need to be solved:

- Technology that enables us to perceive 3D digital content (such as AR/VR headsets)

- Technology that allows us to interact naturally with 3D digital content (such as voice control, eye tracking, hand/body tracking and haptics)

- The principles of effective 3D UX design

Spatial computing and audio-visual technology

Spatial computing depends on 3D imaging technology, such as AR/VR headsets or glasses-free holographic displays. Advances in display technology and computing power over the last decade mean this piece of the puzzle is well on its way to being solved.

Oculus, Varjo, Pimax, HTC Vive, PSVR, GearVR, HoloLens, VRgineers, Magic Leap, RealMax, Dimenco, Looking Glass, Sony Spatial Reality… the list goes on.

Spatial computing and interaction technology

As visual content becomes ever more authentic and three-dimensional, how we interact with it needs to follow suit.

Handheld controllers will always have a place in home gaming and for expert users. But widespread adoption of spatial computing – looking beyond gaming into industrial design, training, healthcare, theme parks, and education – will depend on naturalistic interaction.

Interaction technology #1: (Hand) tracking

Tracking, and particularly hand tracking, means you can interact directly with virtual content. You can grab, pinch, push, slide, and swipe virtual objects with no need to learn button presses or keyboard shortcuts.

When you pick up an object in the physical world, it’s instantaneous and effortless. The same has to be true in spatial computing. Hand tracking software has to track hands accurately and render them realistically in real time, with high fidelity and low latency.

Interaction technology #2: Haptics

Think of how hard it is to fasten a button while wearing a pair of gloves. That gives you a clue as to how important your sense of touch is to natural interaction.

This makes haptics a second key interaction technology for spatial computing.

Haptic feedback in AR/VR can be created through wearables (haptic gloves, vests, and suits) or through Ultraleap’s own mid-air haptics – a “virtual touch” technology that creates the sensation of touch in mid-air. While we are a long way from being able to digitise touch in its entirety, even small touches of haptic feedback dramatically improve the user experience.

Thoughtfully weaving haptics into AR/VR interfaces is particularly important for premium or enterprise-grade products where precise, high-quality interactions are a pivotal feature.

Interaction technology #3: Voice control

Almost as instinctual as using our bodies is using language. The number of voice-based digital assistants in the world is set to exceed the entire human population in 2021.

The ability to engage with AR or VR using both body and voice multiplies the possibilities. A classic MIT experiment from 1979, Put That There, illustrates this beautifully.

In it, the user could position an object on a screen by pointing at the spot where they wanted the object to appear and telling the computer what sort of object they wanted (“Put a yellow circle there.”). The interaction is far easier to complete by combining voice and hand tracking than by using either one alone.

Interaction technology #4: Eye tracking

Eye tracking is mostly used to deliver more effective visuals (for example, through foveated rendering). It can also make interaction more effortless.

Headset manufacturers such as Varjo are using their ability to track where a user is looking to better understand intent. This enables them to surface relevant, contextual information such as interaction menus in a fluid, effortless way. It minimises clutter and reduces cognitive load.

Spatial computing and 3D UX design

Even if we have the technology in place, we won’t unlock the potential of spatial computing by mirroring existing 2D interaction design. Taking interfaces into 3D requires a new era of UX design that reconnects with natural human interaction.

There are some standards beginning to emerge in XR, but there is much still to be done. This is particularly the case when it comes to natural interaction. Best-practice UX principles for voice, eye tracking, hand tracking, and haptics are still a work in progress.

Mapping out what is possible will take broad exploration and a willingness to innovate. It will also take an evidence-based approach that validates ideas in real products. The journey out of flatland, from 2D to 3D interfaces, will be disorienting but exciting.

Spatial computing in the wild

Although we’re still in the early stages of the journey, at Ultraleap we’re already seeing the real-world impact of spatial computing.

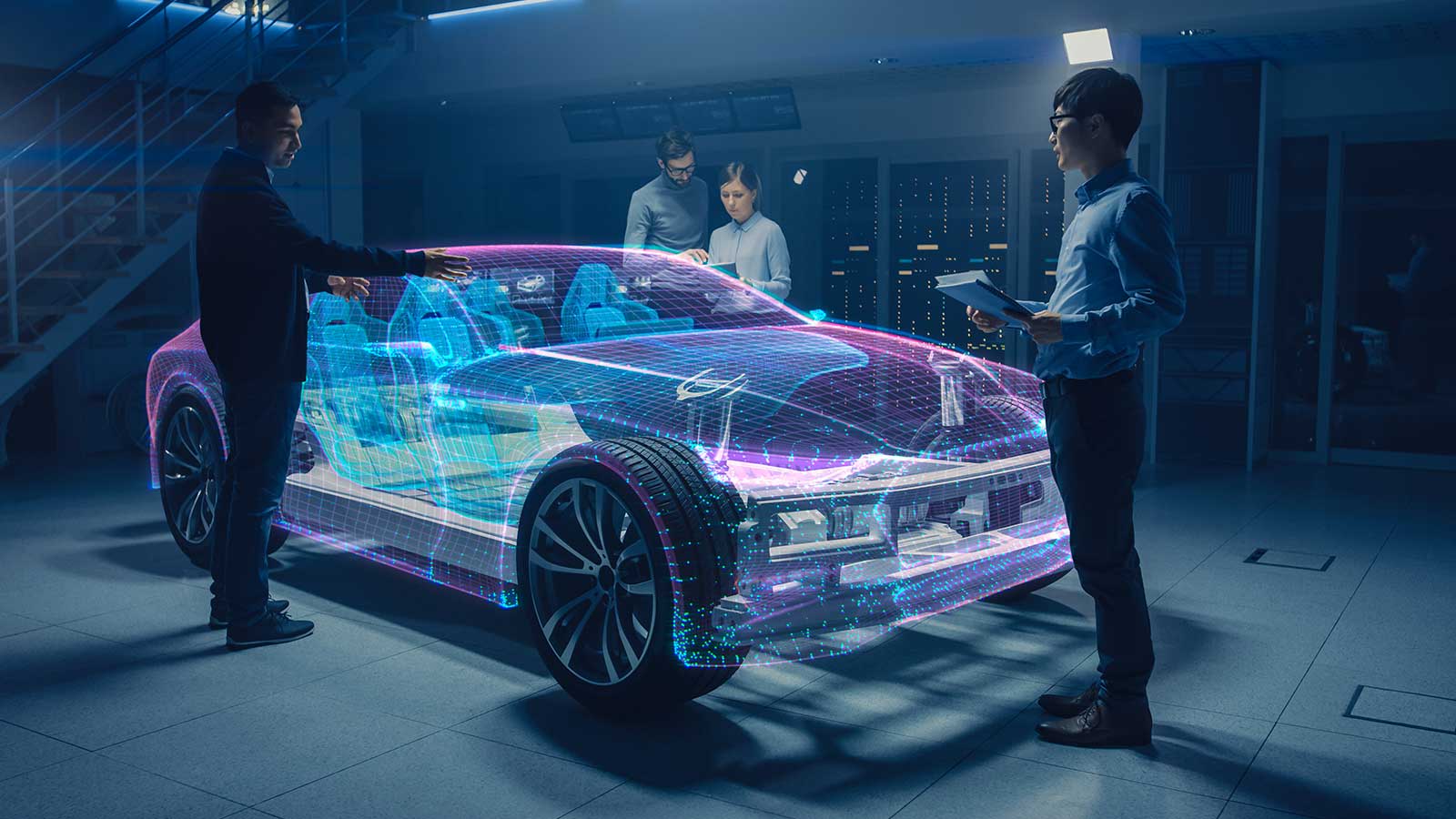

In automotive, VR and hand tracking are enabling virtual prototyping of production lines. This means expensive, full-scale physical models are no longer needed.

In healthcare, VR-based vision therapy is improving sight and depth perception in people with amblyopia (better known as “lazy eye”). Amblyopia is very hard to treat with conventional methods.

Location-based entertainment companies are using spatial computing to deepen immersion. Two examples are the Twilight Saga-based Midnight Ride at China’s Lionsgate Entertainment World and The VOID’s hyper-VR Marvel Avengers experience. Both use hand tracking so that visitors can see their own hands inside the VR experience, reacting and moving in real time. Fallen Planet Studios’ AFFECTED: The Visit brings a new dimension to VR horror with spooky tactile effects created using mid-air haptics.

Once upon a time, the idea of bringing your thoughts into the world with a few spoken words or a wave of your hand was simply science fiction or magical thinking. Welcome to the new reality.