Hand Tracking in VR: Design for Direct Interaction

Posted: February 12, 2021

Our experience designing for hand tracking in VR has taught us it’s usually (though not always) better to design for direct interaction rather than abstract poses or gestures. Matt Corrall, Design Director, digs into the why and the how of this key design principle.

Designing for hands in VR is different to designing for controllers. Recently, we launched the latest version of our XR design guidelines, a toolkit for anyone prototyping or designing UX for immersive experiences with hand tracking.

Our XR design guidelines include guidance on all aspects of designing for hand tracking, from basic principles to designing menus, UI panels, and virtual objects. But underlying them all is one of our key VR design principles: It’s usually (though not always) better to design for direct interaction rather than abstract poses or gestures.

Direct interaction in VR

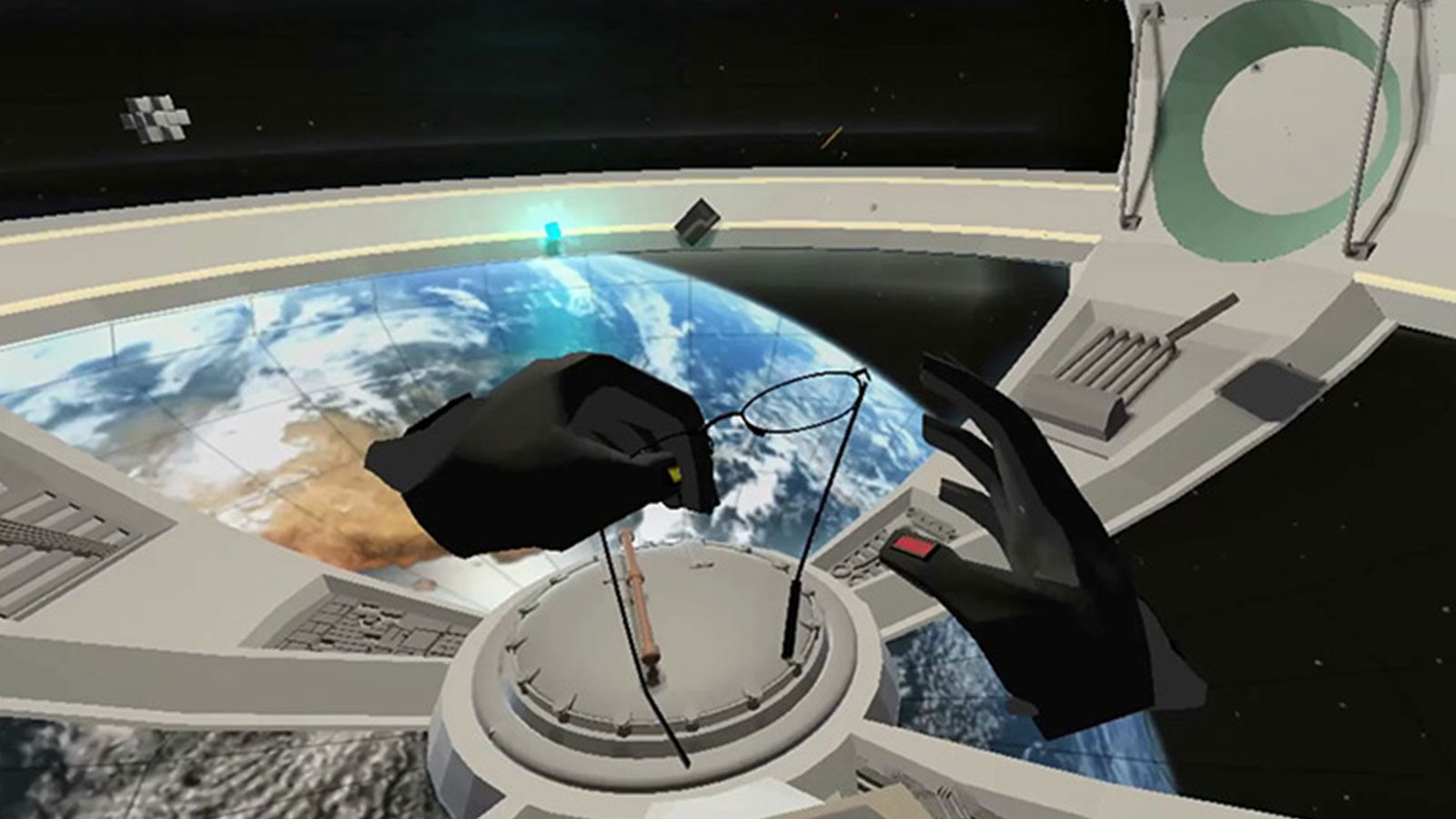

When you pick up an item in VR or push a virtual button, that’s a direct interaction. We’ve found that well-designed virtual objects are (quite literally) the building blocks of engaging hand tracking experiences.

Physical objects convey information about how they can be used. These cues are called affordances. When you see a doorknob, you know you can turn it. When you see a chair, you know you can sit on it.

Well-designed virtual objects provide clues about what can be done, what’s happening, and what’s about to happen. Putting grooves in the surface of a ball in VR shows users where to place their fingers to pick it up. Buttons can look like they need to be pushed, and UI panel elements can look like they can be grabbed and moved.
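One way to think about "what can be done, what’s happening, and what’s about to happen" is as a small set of visual states driven by where the fingertip is. The sketch below is illustrative only: the `VirtualButton` class, its field names, and the 5 cm and 1 cm thresholds are assumptions made for this example, not values from our guidelines or any SDK.

```python
from dataclasses import dataclass
from enum import Enum


class ButtonState(Enum):
    IDLE = "idle"        # no fingertip nearby: resting appearance
    HOVER = "hover"      # fingertip close: hint at what is about to happen
    PRESSED = "pressed"  # fingertip has pushed the surface in far enough


@dataclass
class VirtualButton:
    # Both thresholds are hypothetical tuning values, in metres.
    hover_distance_m: float = 0.05  # start highlighting within 5 cm
    press_depth_m: float = 0.01     # 1 cm of travel counts as a press

    def state_for(self, fingertip_height_m: float) -> ButtonState:
        """Map fingertip height to a feedback state.

        fingertip_height_m is the signed distance of the fingertip above
        the button's resting surface; negative means pushed in.
        """
        if fingertip_height_m <= -self.press_depth_m:
            return ButtonState.PRESSED
        if fingertip_height_m <= self.hover_distance_m:
            return ButtonState.HOVER
        return ButtonState.IDLE
```

Each state would typically map to a distinct visual or audio response (for example a highlight on hover and a depressed cap on press), so the button itself communicates what the user can do next.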

We’ve found that classic industrial and product design principles (such as the concept of affordances) can offer many useful cues for designing objects in VR.

Users already have a lifetime of familiarity with physical objects. When virtual objects appear and behave similarly to how they would in the physical world, immersion is maintained and little or no instruction is required.

Using hand tracking, you can interact with objects naturally. They can be picked up, moved, dropped, resized, thrown, torn in pieces, and much more. Users can manipulate objects with a broad range of push, pinch, and grab interactions.

Paradoxically, we also use physical cues to develop VR experiences that aren’t physically possible. Actions such as resizing objects with your hands or storing them in infinite, virtual pockets extend users’ capabilities beyond what is possible in the real world.

Users immediately comprehend analogies to real-world counterparts. This means they can quickly grow familiar with novel, coherent interaction patterns.

Our XR Design Guidelines are an essential resource for prototyping and designing with hand tracking

Abstract poses and gestures

The direct, physical method is usually the most intuitive way to interact in VR. However, there are scenarios where abstract hand poses and gestures are appropriate and beneficial. For example, a user might pinch their thumb and forefinger together to activate a VR drawing tool, then draw in the air.
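As a rough illustration of the pinch-to-draw example, a pinch can be approximated by the gap between the thumb tip and index fingertip. This is a minimal sketch under stated assumptions: `PinchDetector`, `record_stroke`, and the 2 cm and 4 cm thresholds are hypothetical names and values chosen for the example, and real tracking data would come from whatever hand tracking API you are using.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # fingertip position in metres


@dataclass
class PinchDetector:
    # Hypothetical thresholds. Using two values (hysteresis) stops the
    # pinch from flickering on and off when the gap hovers near one limit.
    start_m: float = 0.02    # gap below this begins a pinch
    release_m: float = 0.04  # gap above this ends a pinch
    pinching: bool = False

    def update(self, thumb_tip: Point, index_tip: Point) -> bool:
        """Feed one tracking frame; returns True while the pinch is held."""
        gap = math.dist(thumb_tip, index_tip)
        if self.pinching:
            if gap > self.release_m:
                self.pinching = False
        elif gap < self.start_m:
            self.pinching = True
        return self.pinching


def record_stroke(frames: Iterable[Tuple[Point, Point]],
                  detector: PinchDetector) -> List[Point]:
    """Collect index fingertip positions for every frame the pinch is held."""
    stroke: List[Point] = []
    for thumb_tip, index_tip in frames:
        if detector.update(thumb_tip, index_tip):
            stroke.append(index_tip)
    return stroke
```

The wider release threshold is a design choice: it lets the user relax their fingers slightly mid-stroke without the line breaking.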

Abstract gestures and poses need to be taught to users when they’re introduced. They also require more cognitive effort than direct interaction.

A button or object in VR is already telling you what to do through its form and movement. In contrast, every time you use an abstract gesture, you have to pull it out of your memory without prompts from the world around you.

Gestures and poses work best with hand tracking when used for a relatively small number of actions that are valuable to the user. This makes them feel worth learning. Enabling locomotion, or bringing up a main menu UI panel, are examples of this.

We find that many VR interfaces benefit from a small number of abstract gestures (perhaps as few as one or two) to supplement direct interaction.

Hand tracking will be to VR what the touchscreen was to 2D computing

Touchscreens opened up 2D computing to new demographics, new use-cases, and vast new markets. In the same way, hand tracking opens up VR by making it accessible to people unable or unwilling to learn the complexities of interaction with controllers.

The balance between direct interaction and abstract gestures we explore in our XR design guidelines parallels the development of touchscreen user interfaces.

Touchscreens introduced an immediate, intuitive way to interact with digital content. Typing on a digital keypad, dragging and dropping an icon, using a slider to confirm a purchase – these are all examples of direct interaction.

This has then been supplemented with a relatively small number of standardized abstract gestures (swipe, pinch to zoom, etc.).

We can expect a similar pattern to play out with hand tracking interfaces in VR. Virtual interactions will be largely direct and physical. This will be supplemented by a small number of useful abstract gestures that will likely become increasingly standardized.

We’re working with other companies in the VR ecosystem on standardizing and driving adoption of a common set of gestures for VR. The adoption of good standards is one of the things that helped touchscreens become ubiquitous, and we can expect the same to hold true for VR.

Accessible interaction in VR isn’t just about consumer markets

Making VR more accessible isn’t just about widening participation in mainstream consumer markets. It’s also about enterprise use-cases.

Not everyone is a gamer. There’s no guarantee a customer or time-poor C-suite executive will find button pushes intuitive. Remote collaboration, menu launchers, training, medical rehabilitation, and design reviews are all examples where low-friction interaction adds value and improves ROI.

For more information to help you prototype and develop the hand tracking interfaces that unlock new use-cases for VR, explore our detailed XR design guidelines.

We’re looking forward to seeing how you use them, and what you create!

Matt Corrall is Design Director at Ultraleap.
